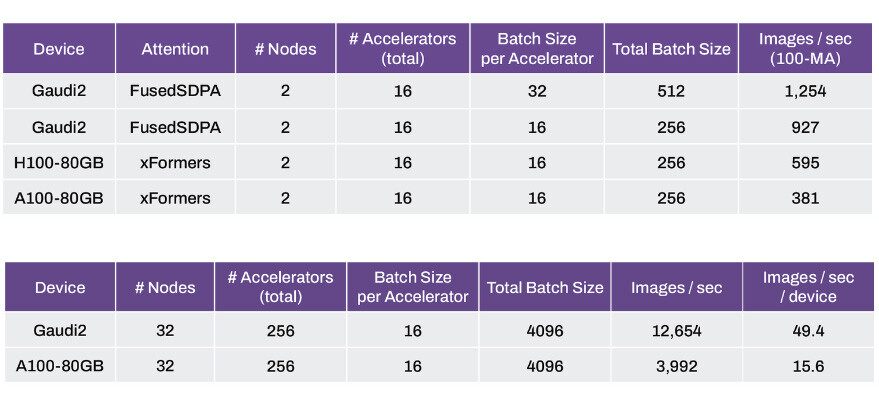

Stability AI, the creators of the popular Stable Diffusion generative AI model, have run initial performance tests of Stable Diffusion 3 on well-known data-center AI accelerators: the NVIDIA H100 "Hopper" 80 GB, the NVIDIA A100 "Ampere" 80 GB, and Intel's Gaudi2 96 GB. Unlike the H100, a general-purpose GPU built around CUDA and Tensor cores, the Gaudi2 is designed specifically to accelerate generative AI and LLMs. In a blog post, Stability AI reports that in its two-node test the Intel Gaudi2 96 GB delivered roughly 56% higher image throughput than the H100 80 GB.

With 2 nodes, 16 accelerators, and a fixed batch size of 16 per accelerator (256 in total), the Intel Gaudi2 array produces 927 images per second, compared with 595 images per second for the H100 array and 381 images per second for the A100 array, using the same number of accelerators and nodes in each case. Scaling up to 32 nodes and 256 accelerators, again with a batch size of 16 per accelerator (a total batch size of 4,096), the Gaudi2 array achieves 12,654 images per second, or 49.4 images per second per device, versus 3,992 images per second, or 15.6 images per second per device, for the older A100 "Ampere" array. Note that these results were obtained with stock PyTorch. Stability AI acknowledges that with TensorRT optimization, A100 chips can generate images up to 40% faster than the Gaudi2, but the company expects that further optimization will allow the Gaudi2 to surpass the A100.
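The batch-size and throughput arithmetic above can be checked with a few lines of Python. This is a minimal sketch: the throughput figures are the ones quoted in the article, while the helper names (`global_batch`, `per_device_rate`, `percent_faster`, `scaling_efficiency`) are hypothetical, and "scaling efficiency" here is a simple derived ratio, not a metric Stability AI reports.

```python
# Sanity-check the throughput arithmetic quoted in the article.
# All inputs are the reported figures; nothing here is measured.

BATCH_PER_DEVICE = 16

# Images per second, keyed by accelerator count (2 nodes = 16, 32 nodes = 256).
THROUGHPUT = {
    16: {"Gaudi2": 927, "H100": 595, "A100": 381},
    256: {"Gaudi2": 12_654, "A100": 3_992},  # no H100 figure is quoted at 32 nodes
}


def global_batch(devices: int, per_device: int = BATCH_PER_DEVICE) -> int:
    """Total batch size when every accelerator processes its own batch."""
    return devices * per_device


def per_device_rate(total_rate: float, devices: int) -> float:
    """Average images per second contributed by each accelerator."""
    return total_rate / devices


def percent_faster(a: float, b: float) -> float:
    """How much higher throughput a has than b, as a percentage."""
    return (a / b - 1.0) * 100.0


def scaling_efficiency(chip: str, small: int = 16, large: int = 256) -> float:
    """Hypothetical metric: per-device rate at the large scale divided by the
    per-device rate at the small scale (1.0 = perfect linear scaling)."""
    small_rate = per_device_rate(THROUGHPUT[small][chip], small)
    large_rate = per_device_rate(THROUGHPUT[large][chip], large)
    return large_rate / small_rate


if __name__ == "__main__":
    for devices in sorted(THROUGHPUT):
        print(f"{devices} accelerators: global batch {global_batch(devices)}")
        for chip, rate in THROUGHPUT[devices].items():
            print(f"  {chip}: {per_device_rate(rate, devices):.1f} images/s per device")
    print(f"Gaudi2 vs H100 (2 nodes): {percent_faster(927, 595):.1f}% faster")
    for chip in ("Gaudi2", "A100"):
        print(f"{chip} scaling efficiency 16 -> 256: {scaling_efficiency(chip):.2f}")
```

Run as a script, it reproduces the roughly 56% Gaudi2-over-H100 margin and the 49.4 and 15.6 images per second per device at 256 accelerators. By this naive per-device measure, throughput per accelerator drops by about 15% for the Gaudi2 and about 35% for the A100 when going from 16 to 256 accelerators.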

Stability AI intends to integrate the Gaudi2 into Stability Cloud, crediting the chip's faster interconnect and larger 96 GB memory with making Intel's accelerator competitive in the AI market.